#701 2013-11-09 18:05:21

- empie

- Member

- From: The Netherlands

- Registered: 2013-06-15

- Posts: 9

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Try using "-cpu qemu64" instead of "-cpu host". That solved my issues.

Offline

#702 2013-11-09 18:18:29

- cmorrow

- Member

- Registered: 2013-11-09

- Posts: 2

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Changing the -cpu option worked. Thanks!

Offline

#703 2013-11-11 03:03:06

- mafferri

- Member

- Registered: 2013-11-11

- Posts: 7

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi,

thanks for this thread! I had been using this setup for a couple of years under Xen, but with KVM and VFIO it's easier and, most importantly, more stable (with Xen the guest reboot was not always successful; with KVM it is!).

Anyway, I converted most of the manual qemu options into libvirt XML. The main reason was that libvirt handles vCPU pinning and I couldn't find a way to do it manually.

Another positive effect is host startup/shutdown handling: the libvirt init scripts stop and start your guest on host shutdown and startup.

<domain type='kvm' id='26' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>
  <name>windows7</name>
  <uuid>xxxxxxx-xxxxx-xxxxx-xxxx-xxxxxxxxx</uuid>
  <memory unit='KiB'>8388608</memory>
  <currentMemory unit='KiB'>8388608</currentMemory>
  <memoryBacking>
    <nosharepages/>
    <locked/>
  </memoryBacking>
  <vcpu placement='static'>4</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='2'/>
    <vcpupin vcpu='1' cpuset='6'/>
    <vcpupin vcpu='2' cpuset='3'/>
    <vcpupin vcpu='3' cpuset='7'/>
    <emulatorpin cpuset='0-1,4-5'/>
  </cputune>
  <resource>
    <partition>/machine</partition>
  </resource>
  <os>
    <type arch='x86_64' machine='pc-q35-1.5'>hvm</type>
    <boot dev='hd'/>
    <smbios mode='host'/>
  </os>
  <cpu mode='host-passthrough'>
    <topology sockets='1' cores='2' threads='2'/>
  </cpu>
  <clock offset='localtime'/>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <devices>
    <emulator>/usr/local/kvm/bin/qemu-system-x86_64</emulator>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='none' discard='unmap'/>
      <source dev='/dev/ssd/win7system'/>
      <target dev='vda' bus='virtio'/>
      <alias name='virtio-disk0'/>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x03' function='0x0'/>
    </disk>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='none' discard='unmap'/>
      <source dev='/dev/mapper/win7games_cached'/>
      <target dev='vdb' bus='virtio'/>
      <alias name='virtio-disk1'/>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x04' function='0x0'/>
    </disk>
    <controller type='sata' index='0'>
      <alias name='sata0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x1f' function='0x2'/>
    </controller>
    <controller type='pci' index='0' model='pcie-root'>
      <alias name='pcie.0'/>
    </controller>
    <controller type='pci' index='1' model='dmi-to-pci-bridge'>
      <alias name='pci.1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </controller>
    <controller type='pci' index='2' model='pci-bridge'>
      <alias name='pci.2'/>
      <address type='pci' domain='0x0000' bus='0x01' slot='0x01' function='0x0'/>
    </controller>
    <controller type='usb' index='0' model='none'>
      <alias name='usb0'/>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x01' function='0x0'/>
    </controller>
    <interface type='bridge'>
      <mac address='00:xx:xx:xx:xx:xx'/>
      <source bridge='br0'/>
      <target dev='vnet0'/>
      <model type='virtio'/>
      <alias name='net0'/>
      <rom bar='off'/>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x02' function='0x0'/>
    </interface>
    <memballoon model='none'>
      <alias name='balloon0'/>
    </memballoon>
  </devices>
  <seclabel type='none'/>
  <qemu:commandline>
    <qemu:arg value='-device'/>
    <qemu:arg value='ioh3420,bus=pcie.0,addr=1c.0,multifunction=on,port=1,chassis=1,id=root.1'/>
    <qemu:arg value='-device'/>
    <qemu:arg value='vfio-pci,host=08:00.0,bus=root.1,addr=00.0,multifunction=on,x-vga=on'/>
    <qemu:arg value='-device'/>
    <qemu:arg value='vfio-pci,host=08:00.1,bus=root.1,addr=00.1'/>
    <qemu:arg value='-device'/>
    <qemu:arg value='vfio-pci,host=00:1d.0,bus=pcie.0'/>
    <qemu:arg value='-device'/>
    <qemu:arg value='vfio-pci,host=00:1d.1,bus=pcie.0'/>
    <qemu:arg value='-device'/>
    <qemu:arg value='vfio-pci,host=00:1d.2,bus=pcie.0'/>
    <qemu:arg value='-device'/>
    <qemu:arg value='vfio-pci,host=00:1d.7,bus=pcie.0'/>
    <qemu:arg value='-bios'/>
    <qemu:arg value='/usr/src/kvm/seabios-1.7.2-patched/seabios/out/bios.bin'/>
    <qemu:env name='DISPLAY' value=':0'/>
    <qemu:env name='QEMU_PA_SAMPLES' value='128'/>
    <qemu:env name='QEMU_AUDIO_DRV' value='pa'/>
  </qemu:commandline>
</domain>

Adapt the UUID and MAC address, and of course your PCI IDs...
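As a rough sketch, the <vcpupin> lines in the <cputune> section of the XML above could be generated instead of typed by hand. The function name gen_vcpupin is made up for illustration and is not part of libvirt; it only prints XML text to paste into the domain definition, and it assumes guest vCPUs are numbered from 0 in the order the host CPUs are listed.

```shell
#!/bin/sh
# Hypothetical helper (not a libvirt tool): print one <vcpupin> element
# per host CPU argument, numbering guest vCPUs from 0 upwards.
gen_vcpupin() {
    vcpu=0
    for host_cpu in "$@"; do
        printf "<vcpupin vcpu='%s' cpuset='%s'/>\n" "$vcpu" "$host_cpu"
        vcpu=$((vcpu + 1))
    done
}
```

Calling `gen_vcpupin 2 6 3 7` should print the same four pinning lines as in the XML. Which host CPUs share a core depends on the machine, so the list has to be chosen per host.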

Greetings

K.

Thanks!

I forgot (alias name=) -.-'

Thanks to all of you, I am finally able to do what I wanted to do 15 years ago.

I would like to contribute a note on AMD FX CPUs: they are able to work well with twice as many processes as they have cores.

I have an FX 4100 and will dedicate 3 cores to the guest, set up with hyper-threading.

As for the distro, I'm using Gentoo.

Offline

#704 2013-11-11 22:09:13

- aw

- Member

- Registered: 2013-10-04

- Posts: 921

- Website

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Testing a Linux guest w/ a GTX660, I can confirm that things go bad when using the NV_RegEnableMSI=1 option. This worked fine for me with a Quadro card. I see a single interrupt and the monitor goes out of sync and never comes back after OS bootup. I'll try to find some time to dig into it. I also note that the audio driver specifically checks for nvidia devices and blacklists MSI on snd-hda-intel, so at some point nvidia hasn't played nicely with Linux MSI support. Perhaps there's a reason why the Linux nvidia driver defaults to INTx as well.

Good news/bad news...

Good news: I figured out the problem and posted a patch that solves it on my GTX660: http://lists.nongnu.org/archive/html/qe … 01390.html

Bad news: This means that for every MSI interrupt, the Nvidia driver does a write to MMIO space at an offset that QEMU needs to trap in order to "virtualize" the device. That may negate any performance benefit of MSI vs INTx. As noted in the patch we might be able to use an ioeventfd to make handling more asynchronous, but the overhead is probably still more than INTx, which we can handle without exiting to QEMU userspace.

http://vfio.blogspot.com

Looking for a more open forum to discuss vfio related uses? Try https://www.redhat.com/mailman/listinfo/vfio-users

Offline

#705 2013-11-12 01:33:29

- mafferri

- Member

- Registered: 2013-11-11

- Posts: 7

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

kaeptnb wrote: oh, I overlooked the main part: you can successfully run qemu manually as a user!

That never worked for me; when I started qemu as a user I got the same or similar messages as you.

Yup, that works fine. It just required chowning the /dev/vfio devices and the hugetlbfs area.

Having it in libvirt would be cosmetic. I have some LXC containers managed with libvirt, so this would put everything in a single interface. But libvirt is giving me a headache: I have no idea where it takes the RLIMIT_MEMLOCK value from. It seems to ignore limits.conf.

I had the same problem. Changing the user and group to root in /etc/libvirt/qemu.conf and libvirtd.conf works fine, with no error output -.-'

The same problem occurs even if you launch qemu with "sudo -u user qemu ......"
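Since running qemu as a regular user depends on that user owning the /dev/vfio nodes, a quick check along these lines may help before launching. This is only a sketch: the function name vfio_unwritable is invented here, and it only tests read/write access for the current user; it does not look at the hugetlbfs area or RLIMIT_MEMLOCK.

```shell
#!/bin/sh
# Hypothetical helper: list every entry under a VFIO device directory
# (default /dev/vfio) that the current user cannot both read and write.
# Prints nothing when everything is accessible or the directory is empty.
vfio_unwritable() {
    dir=${1:-/dev/vfio}
    for node in "$dir"/*; do
        # An unmatched glob stays literal; skip it.
        [ -e "$node" ] || continue
        if [ ! -r "$node" ] || [ ! -w "$node" ]; then
            echo "$node"
        fi
    done
}
```

Run as the user that will start qemu, for example `vfio_unwritable`; any path it prints would still need a chown or a udev rule.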

Offline

#706 2013-11-12 02:18:52

- a123

- Member

- Registered: 2013-11-05

- Posts: 2

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi all, I can passthrough my VGA to VM successfully using both pci-assign and vfio-pci. What's the difference between these two methods?

Offline

#707 2013-11-12 02:30:23

- aw

- Member

- Registered: 2013-10-04

- Posts: 921

- Website

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

a123 wrote: Hi all, I can passthrough my VGA to VM successfully using both pci-assign and vfio-pci. What's the difference between these two methods?

pci-assign is effectively deprecated upstream. It has a poor device ownership model, it's a patchwork of security issues, it relies on userspace to protect the kernel against peer-to-peer isolation, and kvm would really like to get away from being a device driver and managing the iommu. vfio-pci is meant to replace it. Additionally, pci-assign doesn't actually have VGA support. You can only use it to assign a graphics card as a secondary device to the VM. If you can do this, great. It avoids a number of issues with VGA. vfio-pci should work equally well with that model, but also supports use as a primary VGA device with the x-vga=on option, which seems to enable quite a few more cards.

http://vfio.blogspot.com

Looking for a more open forum to discuss vfio related uses? Try https://www.redhat.com/mailman/listinfo/vfio-users

Offline

#708 2013-11-12 03:00:00

- a123

- Member

- Registered: 2013-11-05

- Posts: 2

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

a123 wrote:Hi all, I can passthrough my VGA to VM successfully using both pci-assign and vfio-pci. What's the difference between these two methods?

pci-assign is effectively deprecated upstream. It has a poor device ownership model, it's a patchwork of security issues, it relies on userspace to protect the kernel against peer-to-peer isolation, and kvm would really like to get away from being a device driver and managing the iommu. vfio-pci is meant to replace it. Additionally, pci-assign doesn't actually have VGA support. You can only use it to assign a graphics card as a secondary device to the VM. If you can do this, great. It avoids a number of issues with VGA. vfio-pci should work equally well with that model, but also supports use as a primary VGA device with the x-vga=on option, which seems to enable quite a few more cards.

Do VGA passthrough and GPU passthrough mean the same thing? Is there a way to show the video output via SDL or VNC without connecting a physical monitor to the passed-through VGA card?

Offline

#709 2013-11-12 03:08:08

- aw

- Member

- Registered: 2013-10-04

- Posts: 921

- Website

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

aw wrote:a123 wrote:Hi all, I can passthrough my VGA to VM successfully using both pci-assign and vfio-pci. What's the difference between these two methods?

pci-assign is effectively deprecated upstream. It has a poor device ownership model, it's a patchwork of security issues, it relies on userspace to protect the kernel against peer-to-peer isolation, and kvm would really like to get away from being a device driver and managing the iommu. vfio-pci is meant to replace it. Additionally, pci-assign doesn't actually have VGA support. You can only use it to assign a graphics card as a secondary device to the VM. If you can do this, great. It avoids a number of issues with VGA. vfio-pci should work equally well with that model, but also supports use as a primary VGA device with the x-vga=on option, which seems to enable quite a few more cards.

Do VGA passthrough and GPU passthrough mean the same thing? Is there a way to show the video output via SDL or VNC without connecting a physical monitor to the passed-through VGA card?

To me, VGA passthrough means you're actually making use of VGA, which means it's the primary VM display including VM BIOS output. GPU passthrough is without VGA, which mostly means a secondary VM display. GPU passthrough might be a primary display in a legacy-free environment (UEFI). VNC is only available using an in-band server (one running in the VM). Out-of-band SDL/VNC is only for emulated graphics.

http://vfio.blogspot.com

Looking for a more open forum to discuss vfio related uses? Try https://www.redhat.com/mailman/listinfo/vfio-users

Offline

#710 2013-11-12 10:41:33

- ilya80

- Member

- Registered: 2013-11-12

- Posts: 34

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi everyone!

I'm yet another guy extremely interested in passing through my GPU to get rid of dual-boot once & for all. Thanks a lot for working on this, the effort is very much appreciated.

Can someone please confirm that using the instructions provided in the first post should be enough to pass through an NVIDIA GTX 680 card? Previously, for Xen, this was possible only after hard-modding the card into a "Multi-OS capable" GRID K2 or Quadro K5000 card.

Offline

#711 2013-11-12 14:38:09

- aw

- Member

- Registered: 2013-10-04

- Posts: 921

- Website

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi everyone!

I'm yet another guy extremely interested in passing through my GPU to get rid of dual-boot once & for all. Thanks a lot for working on this, the effort is very much appreciated.

Can someone please confirm that using the instructions provided in the first post should be enough to pass through an NVIDIA GTX 680 card? Previously, for Xen, this was possible only after hard-modding the card into a "Multi-OS capable" GRID K2 or Quadro K5000 card.

It works with a GTX660 for me. Depending on whether your primary host graphics is Intel, you may need to patch the host kernel for i915 VGA arbiter fixes. Also if on an Intel system you'll need to patch QEMU to disable NoSnoop on the device. I have a plan for solving the latter problem more permanently, but it won't come until v3.13 and QEMU 1.8. The VGA arbiter problem is going to take longer.

http://vfio.blogspot.com

Looking for a more open forum to discuss vfio related uses? Try https://www.redhat.com/mailman/listinfo/vfio-users

Offline

#712 2013-11-12 14:45:38

- ilya80

- Member

- Registered: 2013-11-12

- Posts: 34

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

It works with a GTX660 for me. Depending on whether your primary host graphics is Intel, you may need to patch the host kernel for i915 VGA arbiter fixes. Also if on an Intel system you'll need to patch QEMU to disable NoSnoop on the device. I have a plan for solving the latter problem more permanently, but it won't come until v3.13 and QEMU 1.8. The VGA arbiter problem is going to take longer.

Thanks for the reply, this gives me hope! I may get away without putting a soldering iron to my GTX 680. Also, KVM has a stronger appeal to me; I've used it in the past. I'm running Debian, but I don't see that as a show stopper; I'm also fine with patching the kernel and QEMU (running with Intel HD 2500 as primary GPU). I'll definitely give it a shot.

Last edited by ilya80 (2013-11-12 14:56:37)

Offline

#713 2013-11-12 19:14:05

- cmdr

- Member

- Registered: 2013-08-01

- Posts: 8

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

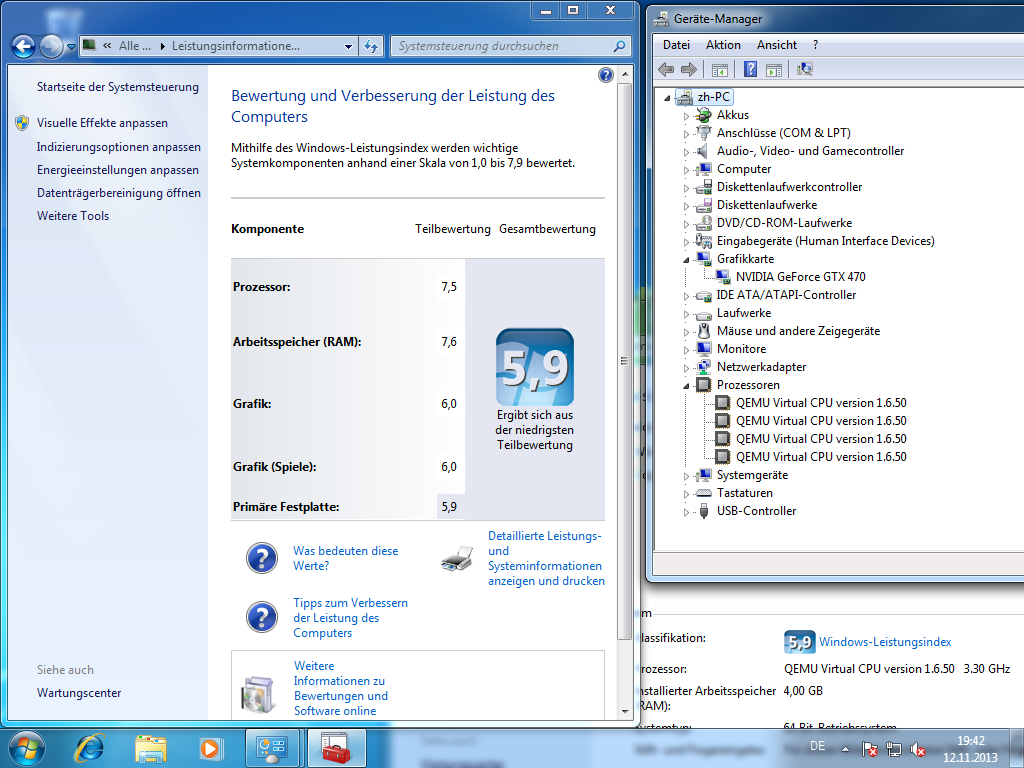

After some months and after getting a new GPU I wanted to try this again. This time I am using an AMD 7750 for the host and an NVIDIA GTX 470 for the guest.

When I tried to install Windows 7 in my guest I got a BSOD showing "page fault in nonpaged area", but I solved that by changing "-cpu host" to "-cpu qemu64" (I think I only get the BSOD when I set memory higher than 1024 *confused*).

After lots of reboots I finally got what I wanted...

Thank you very much!

For the record:

I only installed the packages from the first post (qemu-git, linux-mainline, seabios) then added pci-id's to grub and blacklisted nouveau.

Xeon E3-1230v2

ASRock Fatal1ty Z77 professional

ATI Radeon 7750 (Host)

NVIDIA GTX-470 (Guest)
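The pci-stub.ids= kernel parameter takes comma-separated vendor:device pairs. As a hedged sketch, those pairs could be read from sysfs rather than copied from lspci output. The function name pci_stub_ids and the SYSFS override variable are assumptions made for this example; the vendor and device files are the same ones the vfio-bind script from the first post reads.

```shell
#!/bin/sh
# Hypothetical helper: print a pci-stub.ids= parameter for the PCI
# addresses given as arguments (e.g. 0000:02:00.0 0000:02:00.1).
# SYSFS is an illustrative override for the sysfs root; default is /sys.
pci_stub_ids() {
    sysfs=${SYSFS:-/sys}
    ids=""
    for dev in "$@"; do
        vendor=$(cat "$sysfs/bus/pci/devices/$dev/vendor") || return 1
        device=$(cat "$sysfs/bus/pci/devices/$dev/device") || return 1
        # sysfs reports values such as 0x1002; drop the 0x prefix.
        id="${vendor#0x}:${device#0x}"
        # Join with commas, without a leading comma on the first entry.
        ids="${ids:+$ids,}$id"
    done
    printf 'pci-stub.ids=%s\n' "$ids"
}
```

For example, `pci_stub_ids 0000:02:00.0 0000:02:00.1` prints one line that can be appended to the kernel command line in the bootloader configuration. The addresses are placeholders; use the ones lspci shows for the guest GPU and its audio function.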

# cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-linux-mainline root=/dev/mapper/main-root rw cryptdevice=/dev/sda2:main lang=de locale=de_DE.UTF-8 quiet intel_iommu=on pcie_acs_override=downstream pci-stub.ids=1002:68f9,1002:aa68

qemu-system-x86_64 -enable-kvm -M q35 -m 4096 -cpu qemu64 -smp 4,sockets=1,cores=4,threads=1 \
-bios /usr/share/qemu/bios.bin \
-vga none \
-device ioh3420,bus=pcie.0,addr=1c.0,multifunction=on,port=1,chassis=1,id=root.1 \
-device vfio-pci,host=02:00.0,bus=root.1,addr=00.0,multifunction=on,x-vga=on \
-device vfio-pci,host=02:00.1,bus=root.1,addr=00.1 \
-device ahci,bus=pcie.0,id=ahci \
-drive file=/home/zh32/MyMachines/windows2.img,id=disk,format=raw \
-device ide-hd,bus=ahci.0,drive=disk \
-drive file=/home/zh32/Images/bie764129g.iso,id=isocd \
-device ide-cd,bus=ahci.1,drive=isocd \
-boot menu=on \
-usb -usbdevice host:04f3:0210 -usbdevice host:05ac:1006 -usbdevice host:05ac:0221

Offline

#714 2013-11-12 19:24:30

- nbhs

- Member

- From: Montevideo, Uruguay

- Registered: 2013-05-02

- Posts: 402

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

After some months and after getting a new GPU I wanted to try this again. This time I am using an AMD 7750 for the host and an NVIDIA GTX 470 for the guest.

When I tried to install Windows 7 in my guest I got a BSOD showing "page fault in nonpaged area", but I solved that by changing "-cpu host" to "-cpu qemu64" (I think I only get the BSOD when I set memory higher than 1024 *confused*).

After lots of reboots I finally got what I wanted...

Thank you very much!

Congrats, have you tried using -cpu SandyBridge ?

Offline

#715 2013-11-12 21:39:20

- blacky

- Member

- Registered: 2012-10-26

- Posts: 14

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi!

I’m currently stuck at the first qemu-run where I’m supposed to see the SeaBIOS-Output on my third screen (plugged into the dedicated graphics card). Actually it flickers every time I execute

qemu-system-x86_64 -enable-kvm -M q35 -m 1024 -cpu host -smp 6,sockets=1,cores=6,threads=1 -bios /usr/share/qemu/bios.bin -vga none -device ioh3420,bus=pcie.0,addr=1c.0,multifunction=on,port=1,chassis=1,id=root.1 -device vfio-pci,host=02:00.0,bus=root.1,addr=00.0,multifunction=on,x-vga=on -device vfio-pci,host=02:00.1,bus=root.1,addr=00.1

which means, I guess, that the device is successfully reset. The third display is in standby when booting the host but actually stays on after I first executed qemu. Also the main screen on the IGP (but not the secondary) shows artifacts when starting qemu but I can get rid of them by changing to tty2 and back to X. There are no error messages whatsoever, qemu runs until I kill it. I also tried to use a ROM file for the VGA bios but that didn’t change anything.

My setup:

ASRock Z87 Extreme6

Core i7-4771

HD4850

The HD4850 is for testing only since I’m waiting for R9 290X with a reasonable custom cooling system.

dmesg (relevant info only):

255:[ 0.000000] Kernel command line: BOOT_IMAGE=/vmlinuz-linux-mainline root=/dev/mapper/-root rw cryptdevice=UUID=:_crypt intel_iommu=on pci-stub.ids=1002:9442,1002:aa30

256:[ 0.000000] Intel-IOMMU: enabled

315:[ 0.058278] dmar: IOMMU 0: reg_base_addr fed90000 ver 1:0 cap c0000020660462 ecap f0101a

317:[ 0.058282] dmar: IOMMU 1: reg_base_addr fed91000 ver 1:0 cap d2008020660462 ecap f010da

320:[ 0.058347] IOAPIC id 2 under DRHD base 0xfed91000 IOMMU 1

614:[ 0.667449] IOMMU 0 0xfed90000: using Queued invalidation

615:[ 0.667450] IOMMU 1 0xfed91000: using Queued invalidation

616:[ 0.667451] IOMMU: Setting RMRR:

617:[ 0.667459] IOMMU: Setting identity map for device 0000:00:02.0 [0xbf800000 - 0xcf9fffff]

621:[ 0.668724] IOMMU: Prepare 0-16MiB unity mapping for LPC

685:[ 0.696931] pci-stub: add 1002:9442 sub=FFFFFFFF:FFFFFFFF cls=00000000/00000000

686:[ 0.696936] pci-stub 0000:02:00.0: claimed by stub

687:[ 0.696940] pci-stub: add 1002:AA30 sub=FFFFFFFF:FFFFFFFF cls=00000000/00000000

688:[ 0.696943] pci-stub 0000:02:00.1: claimed by stub

1125: [ 3436.413121] vfio-pci 0000:02:00.0: enabling device (0000 -> 0003)

/sys/

$> ls /sys/kernel/iommu_groups/1/devices

lrwxrwxrwx 1 root root 0 12. Nov 21:01 0000:00:01.0 -> ../../../../devices/pci0000:00/0000:00:01.0

lrwxrwxrwx 1 root root 0 12. Nov 21:01 0000:00:01.1 -> ../../../../devices/pci0000:00/0000:00:01.1

lrwxrwxrwx 1 root root 0 12. Nov 21:01 0000:02:00.0 -> ../../../../devices/pci0000:00/0000:00:01.1/0000:02:00.0

lrwxrwxrwx 1 root root 0 12. Nov 21:01 0000:02:00.1 -> ../../../../devices/pci0000:00/0000:00:01.1/0000:02:00.1

$> ls /sys/devices/pci0000:00/0000:00:01.1/0000:02:00.*

/sys/devices/pci0000:00/0000:00:01.1/0000:02:00.0:

lrwxrwxrwx 1 root root 0 12. Nov 20:23 driver -> ../../../../bus/pci/drivers/vfio-pci

lrwxrwxrwx 1 root root 0 12. Nov 21:01 iommu_group -> ../../../../kernel/iommu_groups/1

[…]

/sys/devices/pci0000:00/0000:00:01.1/0000:02:00.1:

lrwxrwxrwx 1 root root 0 12. Nov 20:26 driver -> ../../../../bus/pci/drivers/vfio-pci

lrwxrwxrwx 1 root root 0 12. Nov 21:01 iommu_group -> ../../../../kernel/iommu_groups/1

[…]

$> ls /sys/bus/pci/drivers/vfio-pci/

lrwxrwxrwx 1 root root 0 12. Nov 23:04 0000:02:00.0 -> ../../../../devices/pci0000:00/0000:00:01.1/0000:02:00.0

lrwxrwxrwx 1 root root 0 12. Nov 23:04 0000:02:00.1 -> ../../../../devices/pci0000:00/0000:00:01.1/0000:02:00.1

--w------- 1 root root 4,0K 12. Nov 23:04 bind

lrwxrwxrwx 1 root root 0 12. Nov 23:04 module -> ../../../../module/vfio_pci

--w------- 1 root root 4,0K 12. Nov 20:04 new_id

--w------- 1 root root 4,0K 12. Nov 23:04 remove_id

--w------- 1 root root 4,0K 12. Nov 20:04 uevent

--w------- 1 root root 4,0K 12. Nov 23:04 unbind

lspci -v

02:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] RV770 [Radeon HD 4850] (prog-if 00 [VGA controller])

Subsystem: Advanced Micro Devices, Inc. [AMD/ATI] MSI Radeon HD 4850 512MB GDDR3

Flags: fast devsel, IRQ 17

Memory at e0000000 (64-bit, prefetchable) [size=256M]

Memory at f0020000 (64-bit, non-prefetchable) [size=64K]

I/O ports at e000 [size=256]

Expansion ROM at f0000000 [disabled] [size=128K]

Capabilities: [50] Power Management version 3

Capabilities: [58] Express Legacy Endpoint, MSI 00

Capabilities: [a0] MSI: Enable- Count=1/1 Maskable- 64bit+

Capabilities: [100] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Kernel driver in use: vfio-pci

Kernel modules: radeon

02:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] RV770 HDMI Audio [Radeon HD 4850/4870]

Subsystem: Advanced Micro Devices, Inc. [AMD/ATI] RV770 HDMI Audio [Radeon HD 4850/4870]

Flags: bus master, fast devsel, latency 0, IRQ 18

Memory at f0030000 (64-bit, non-prefetchable) [size=16K]

Capabilities: [50] Power Management version 3

Capabilities: [58] Express Legacy Endpoint, MSI 00

Capabilities: [a0] MSI: Enable- Count=1/1 Maskable- 64bit+

Capabilities: [100] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Kernel driver in use: vfio-pci

Kernel modules: snd_hda_intel

Unfortunately I cannot use another card since at least the first two PCIe-Ports are in the same iommu group.

I used linux-mainline (AUR), qemu-git (AUR) and seabios (from the first post).

Now I’m a bit stuck because I don’t see any error messages I could relate to this.

The following seems to be more of an (unrelated) IGP problem.

kernel: [drm:ring_stuck] *ERROR* Kicking stuck wait on blitter ring

kernel: [drm] no progress on blitter ring

kernel: [drm:i915_set_reset_status] *ERROR* blitter ring hung flushing bo (0x8aa000 ctx 0) at 0xfac

kernel: [drm] stuck on render ring

kernel: [drm:i915_set_reset_status] *ERROR* render ring hung inside bo (0xa309000 ctx 1) at 0xa3092d4

kernel: [drm:ring_stuck] *ERROR* Kicking stuck wait on blitter ring

Can you probably give me a hint where to look?

Last edited by blacky (2013-11-12 22:09:47)

Offline

#716 2013-11-12 22:16:54

- nbhs

- Member

- From: Montevideo, Uruguay

- Registered: 2013-05-02

- Posts: 402

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Can you probably give me a hint where to look?

Use the packages I provided instead of the AUR ones.

Offline

#717 2013-11-12 22:17:55

- ilya80

- Member

- Registered: 2013-11-12

- Posts: 34

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi!

I’m currently stuck at the first qemu-run where I’m supposed to see the SeaBIOS-Output on my third screen (plugged into the dedicated graphics card). Actually it flickers every time I execute

......

Can you probably give me a hint where to look?

Hi!

I guess your problems should be solved with the advice AW gave me:

"Depending on whether your primary host graphics is Intel, you may need to patch the host kernel for i915 VGA arbiter fixes. Also if on an Intel system you'll need to patch QEMU to disable NoSnoop on the device"

Links to the mentioned patches are in the updated first post of the topic. Do you have those patches already adopted?

Offline

#718 2013-11-12 22:20:20

- blacky

- Member

- Registered: 2012-10-26

- Posts: 14

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Use the packages i provided instead of the AUR ones

I thought that those were o.k. too since you linked them

qemu-git.tar.gz (includes NoSnoop patch) OR qemu-git from AUR

seabios.tar.gz (1.7.3.2)

linux-mainline.tar.gz (3.12.0 includes acs override patch and i915 fixes) OR linux-mainline from AUR

But of course I’ll follow your advice, thanks!

@ilya80: Oh, ok, didn’t catch that.

Offline

#719 2013-11-12 22:28:25

- aw

- Member

- Registered: 2013-10-04

- Posts: 921

- Website

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

[snip] Also the main screen on the IGP (but not the secondary) shows artifacts when starting qemu but I can get rid of them by changing to tty2 and back to X.

sounds like lack of i915 VGA arbiter patch

[snip]

The following seems to be more of an (unrelated) IGP problem.

kernel: [drm:ring_stuck] *ERROR* Kicking stuck wait on blitter ring
kernel: [drm] no progress on blitter ring
kernel: [drm:i915_set_reset_status] *ERROR* blitter ring hung flushing bo (0x8aa000 ctx 0) at 0xfac
kernel: [drm] stuck on render ring
kernel: [drm:i915_set_reset_status] *ERROR* render ring hung inside bo (0xa309000 ctx 1) at 0xa3092d4
kernel: [drm:ring_stuck] *ERROR* Kicking stuck wait on blitter ring

Can you probably give me a hint where to look?

very related. As others noted, mainline doesn't work for this yet.

http://vfio.blogspot.com

Looking for a more open forum to discuss vfio related uses? Try https://www.redhat.com/mailman/listinfo/vfio-users

Offline

#720 2013-11-13 00:33:44

- cmdr

- Member

- Registered: 2013-08-01

- Posts: 8

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

-snip-

Congrats, have you tried using -cpu SandyBridge ?

Yes, I just reinstalled the machine using '-cpu SandyBridge' and with its own SATA controller. Works fine.

Offline

#721 2013-11-13 19:10:41

- mafferri

- Member

- Registered: 2013-11-11

- Posts: 7

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Binding a device to vfio-pci

Assuming the kernel, qemu and seabios are built and working, let's bind some devices.

You can use this script to make life easier:

#!/bin/bash

modprobe vfio-pci

for dev in "$@"; do
        vendor=$(cat /sys/bus/pci/devices/$dev/vendor)
        device=$(cat /sys/bus/pci/devices/$dev/device)
        if [ -e /sys/bus/pci/devices/$dev/driver ]; then
                echo $dev > /sys/bus/pci/devices/$dev/driver/unbind
        fi
        echo $vendor $device > /sys/bus/pci/drivers/vfio-pci/new_id
done

Save it as /usr/bin/vfio-bind

chmod 755 /usr/bin/vfio-bind

Bind the GPU:

vfio-bind 0000:07:00.0 0000:07:00.1

cat /etc/vfio-pci.cfg

DEVICES="0000:00:11.0 0000:04:00.0 0000:05:00.0 0000:06:00.0 0000:07:00.0 0000:07:00.1"

GL!

I'm quoting this because I would like to do the reverse when I shut down the VM.

If I restart the VM, performance is very bad.

I have to reboot the host for everything to work well again.

Offline

#722 2013-11-13 19:24:21

- aw

- Member

- Registered: 2013-10-04

- Posts: 921

- Website

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

I'm quoting this because I would like to do the reverse when I shut down the VM.

If I restart the VM, performance is very bad.

I have to reboot the host for everything to work well again.

You can do the reverse. Here are the scripts I use:

vfio-group <group #>:

#!/bin/sh

if [ ! -e /sys/kernel/iommu_groups/$1 ]; then
    echo "IOMMU group $1 not found"
    exit 1
fi

if [ ! -e /sys/bus/pci/drivers/vfio-pci ]; then
    sudo modprobe vfio-pci
fi

for i in $(ls /sys/kernel/iommu_groups/$1/devices/); do
    if [ -e /sys/kernel/iommu_groups/$1/devices/$i/driver ]; then
        if [ "$(basename $(readlink -f \
            /sys/kernel/iommu_groups/$1/devices/$i/driver))" != \
            "pcieport" ]; then
            echo $i | sudo tee \
                /sys/kernel/iommu_groups/$1/devices/$i/driver/unbind
        fi
    fi
done

for i in $(ls /sys/kernel/iommu_groups/$1/devices/); do
    if [ ! -e /sys/kernel/iommu_groups/$1/devices/$i/driver ]; then
        VEN=$(cat /sys/kernel/iommu_groups/$1/devices/$i/vendor)
        DEV=$(cat /sys/kernel/iommu_groups/$1/devices/$i/device)
        echo $VEN $DEV | sudo tee \
            /sys/bus/pci/drivers/vfio-pci/new_id
    fi
done

vfio-ungroup <group #>:

#!/bin/sh

if [ ! -e /sys/kernel/iommu_groups/$1 ]; then
    echo "IOMMU group $1 not found"
    exit 1
fi

for i in $(ls /sys/kernel/iommu_groups/$1/devices/); do
    VEN=$(cat /sys/kernel/iommu_groups/$1/devices/$i/vendor)
    DEV=$(cat /sys/kernel/iommu_groups/$1/devices/$i/device)
    echo $VEN $DEV | sudo tee \
        /sys/bus/pci/drivers/vfio-pci/remove_id
    echo $i | sudo tee \
        /sys/kernel/iommu_groups/$1/devices/$i/driver/unbind
done

for i in $(ls /sys/kernel/iommu_groups/$1/devices/); do
    echo $i | sudo tee /sys/bus/pci/drivers_probe
done

lsgroup:

#!/bin/sh

BASE="/sys/kernel/iommu_groups"

for i in $(find $BASE -maxdepth 1 -mindepth 1 -type d); do
    GROUP=$(basename $i)
    echo "### Group $GROUP ###"
    for j in $(find $i/devices -type l); do
        DEV=$(basename $j)
        echo -n " "
        lspci -s $DEV
    done
done

I'm not sure how this is going to help your performance though, it's pretty random whether re-loading the host driver is going to resolve anything that the guest driver can't. For graphics devices, if you're using kernel+qemu that support bus reset, that should provide something nearly as good as a host reboot.
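The vfio-group and vfio-ungroup scripts take an IOMMU group number, so it can help to look up the group for a known PCI address first. A minimal sketch, assuming the iommu_group symlink that sysfs exposes under each PCI device; the function name iommu_group_of and the optional sysfs-root argument are invented for this example.

```shell
#!/bin/sh
# Hypothetical helper: print the IOMMU group number of a PCI device
# such as 0000:02:00.0. The optional second argument overrides the
# sysfs root (default /sys), which is only useful for testing.
iommu_group_of() {
    dev=$1
    sysfs=${2:-/sys}
    link="$sysfs/bus/pci/devices/$dev/iommu_group"
    if [ ! -e "$link" ]; then
        echo "no IOMMU group for $dev (is the IOMMU enabled?)" >&2
        return 1
    fi
    # The symlink resolves to .../kernel/iommu_groups/<number>.
    basename "$(readlink -f "$link")"
}
```

With that, something like `vfio-group "$(iommu_group_of 0000:02:00.0)"` would bind the whole group containing the GPU, using an address taken from lspci on the host in question.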

http://vfio.blogspot.com

Looking for a more open forum to discuss vfio related uses? Try https://www.redhat.com/mailman/listinfo/vfio-users

Offline

#723 2013-11-13 20:59:37

- Val532

- Member

- Registered: 2013-11-13

- Posts: 35

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi

First, thank you for the tutorial.

I'm able to run two or three VMs with real GPUs.

But I have a problem: I want to use Virt Manager to start, stop and wake VMs or add new USB passthrough devices, but I'm not able to do that because of the difference between qemu args and the Virt Manager XML config.

Has anybody had more luck with that?

I'm on Ubuntu 13.10 with 3.12 kernel (with patch) qemu master (with patch).

Thanks

PS: I'm french

Offline

#724 2013-11-13 21:02:41

- nbhs

- Member

- From: Montevideo, Uruguay

- Registered: 2013-05-02

- Posts: 402

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

Hi

First, thank you for the tutorial.

I'm able to run two or three VMs with real GPUs.

But I have a problem: I want to use Virt Manager to start, stop and wake VMs or add new USB passthrough devices, but I'm not able to do that because of the difference between qemu args and the Virt Manager XML config.

Has anybody had more luck with that?

I'm on Ubuntu 13.10 with 3.12 kernel (with patch) qemu master (with patch).

Thanks

PS: I'm french

See this post by kaeptnb: https://bbs.archlinux.org/viewtopic.php … 0#p1343400

Would you mind posting your hardware specs?

Last edited by nbhs (2013-11-13 21:08:44)

Offline

#725 2013-11-13 21:30:10

- Val532

- Member

- Registered: 2013-11-13

- Posts: 35

Re: KVM VGA-Passthrough using the new vfio-vga support in kernel =>3.9

So I ran some tests with this method, but no luck. Virt Manager gives me a lot of different errors.

Sometimes an AppArmor error, sometimes "connection reset by peer".

My Hardware is:

Core i5 4670S, so HD 4600 for the host

24 GB RAM (3×8 GB)

ASRock Z87 Extreme6

AMD HD7770 for the first VM

AMD HD5570 for the second VM

AMD HD5570 for the third VM

All of the graphics cards work.

For the moment I only have Windows 7 on the first VM; the second and third will come later.

Offline