You are not logged in.

- Topics: Active | Unanswered

#1 2022-12-15 20:32:59

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

[RESOLVED] Dying SSD?

Hi!

Two days ago the CPU suddenly jumped to 100 % and the laptop (T480, almost always mounted below the table / not touched) froze.

After a couple of minutes of waiting I turned it off. Then it wouldnt boot for a while (staying at the Lenovo boot logo longer than I was patient).

Either the laptop or my patience decided otherwise and it finally booted again.

Now once in a while I get a 100 % CPU freeze-spike again for a few seconds but than it recovered (for now).

Within the log, I found:

Dec 15 21:15:40 hostname kernel: nvme nvme0: I/O 258 QID 1 timeout, reset controller

Dec 15 21:15:40 hostname kernel: nvme0n1: Read(0x2) @ LBA 588873392, 64 blocks, Host Aborted Command (sct 0x3 / sc 0x71)

Dec 15 21:15:40 hostname kernel: I/O error, dev nvme0n1, sector 588873392 op 0x0:(READ) flags 0x80700 phys_seg 5 prio class 2

Dec 15 21:15:40 hostname kernel: nvme0n1: Read(0x2) @ LBA 588873456, 256 blocks, Host Aborted Command (sct 0x3 / sc 0x71)

Dec 15 21:15:40 hostname kernel: I/O error, dev nvme0n1, sector 588873456 op 0x0:(READ) flags 0x80700 phys_seg 17 prio class 2

Dec 15 21:15:40 hostname kernel: nvme0n1: Read(0x2) @ LBA 55147552, 256 blocks, Host Aborted Command (sct 0x3 / sc 0x71)

Dec 15 21:15:40 hostname kernel: I/O error, dev nvme0n1, sector 55147552 op 0x0:(READ) flags 0x80700 phys_seg 31 prio class 2I don't understand the s.m.a.r.t. output:

=== START OF SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)

Critical Warning: 0x00

Temperature: 31 Celsius

Available Spare: 100%

Available Spare Threshold: 3%

Percentage Used: 2%

Data Units Read: 9,456,445 [4.84 TB]

Data Units Written: 14,101,703 [7.22 TB]

Host Read Commands: 132,334,354

Host Write Commands: 359,438,467

Controller Busy Time: 1,390

Power Cycles: 2,638

Power On Hours: 2,426

Unsafe Shutdowns: 1,029

Media and Data Integrity Errors: 0

Error Information Log Entries: 545

Warning Comp. Temperature Time: 0

Critical Comp. Temperature Time: 0

Temperature Sensor 1: 0 Celsius

Temperature Sensor 2: 31 Celsius

Temperature Sensor 3: 37 Celsius

Error Information (NVMe Log 0x01, 4 of 4 entries)

Num ErrCount SQId CmdId Status PELoc LBA NSID VS

0 545 0 0xa003 0xc005 0x000 0 0 -

1 544 0 0x0008 0xc005 0x000 0 0 -

2 543 0 0x0011 0xc005 0x000 0 0 -

3 542 0 0x0162 0xc004 0x000 0 0 -All the bios quick-tests passed.

The question now is: Whats going on? Is my SSD dying?

Thanks a lot for any ideas!

Last edited by smoneck (2023-01-07 10:29:22)

Offline

#2 2022-12-16 03:12:33

- cfr

- Member

- From: Cymru

- Registered: 2011-11-27

- Posts: 7,168

Re: [RESOLVED] Dying SSD?

Back up your data.

Error Information Log Entries: 545 ... Error Information (NVMe Log 0x01, 4 of 4 entries) Num ErrCount SQId CmdId Status PELoc LBA NSID VS 0 545 0 0xa003 0xc005 0x000 0 0 - 1 544 0 0x0008 0xc005 0x000 0 0 - 2 543 0 0x0011 0xc005 0x000 0 0 - 3 542 0 0x0162 0xc004 0x000 0 0 -

Some SSDs, including yours, provide minimal useful SMART data, but those errors are Not Good News. A healthy drive would log 0 errors. Yours has logged 545.

You also have a surprisingly high number of unsafe shutdowns relative to power-on hours and power cycles. However, I don't know how reliable that reading is for comparing results from different drives. It just looks rather high to me. In any case, that is a secondary issue. The errors are the only thing worth looking at in this output.

Last edited by cfr (2022-12-16 03:14:36)

CLI Paste | How To Ask Questions

Arch Linux | x86_64 | GPT | EFI boot | refind | stub loader | systemd | LVM2 on LUKS

Lenovo x270 | Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz | Intel Wireless 8265/8275 | US keyboard w/ Euro | 512G NVMe INTEL SSDPEKKF512G7L

Offline

#3 2022-12-16 08:11:57

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

Not sure how to read your boot timeouts (ie. what was happening there, esp. if you preserve the vendor logo for a silent boot) but see https://wiki.archlinux.org/title/Solid_ … ST_support

Offline

#4 2022-12-17 11:12:51

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

Back up your data.

I have a backup but I have never been dependent on it. There are always the questions "Will it work?" and "Is it complete?".

Two questions I can just answer in hindsight.

Some SSDs, including yours, provide minimal useful SMART data, but those errors are Not Good News. A healthy drive would log 0 errors. Yours has logged 545.

One short hang apparently seems to increment each number by one. I guess that is not a good sign.

You also have a surprisingly high number of unsafe shutdowns relative to power-on hours and power cycles. However, I don't know how reliable that reading is for comparing results from different drives. It just looks rather high to me. In any case, that is a secondary issue. The errors are the only thing worth looking at in this output.

This seems to be realistic. The laptop often does not power off when it should. It then remains within some eternal limbus state spinning fan and sucking power.

(Fun fact: Before the firmware update it even did so appearing powered off but sucking the battery within half a day.)

I had a look at the SSD and it is so properly screwed in place that I can rule out any connectivity issues.

BUT there was some tape on the inside of the metal container that produced some grease(?) that also now sticks on the SSD.

I'm not sure what the purpose of this strip is (thermal or shock moderation) but that's something I need to tackle when swapping the drive.

https://i.imgur.com/DB2g0js.jpeg

https://i.imgur.com/8GNDW9l.jpeg

Mod Edit - Replaced oversized images with links.

CoC - Pasting pictures and code

Last edited by Slithery (2022-12-17 11:38:28)

Offline

#5 2022-12-17 14:10:37

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

Edit: looks like a heatpad to avoid an air cushion between the dies and the case (still air is a great isolator that will keep the dies neatly warm … until they boil)

The fact that it has molten (at least from what I can tell from the photo) tells you that there was some serious local heat.

Re-install it as good as you can.

Last edited by seth (2022-12-17 14:13:41)

Offline

#6 2022-12-18 00:47:53

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

First of all thank you for all the feedback so far!

The fact that it has molten (at least from what I can tell from the photo) tells you that there was some serious local heat.

Shouldn't there be a thermal throttling? I was wondering if maybe simply the plastic was breaking down and releasing the plasticizers.

In particular, given the context of the thermal pad, I'll slowly move up the state scale as 6.50W in my case is pretty much power in those chips.

But seeing the s.m.a.r.t. values rise with every hang, I meanwhile doubt that it is possible to be a driver issue.

Offline

#7 2022-12-18 02:00:34

- cfr

- Member

- From: Cymru

- Registered: 2011-11-27

- Posts: 7,168

Re: [RESOLVED] Dying SSD?

But seeing the s.m.a.r.t. values rise with every hang, I meanwhile doubt that it is possible to be a driver issue.

Might you get that from the bug seth mentioned? It would be worth adding

nvme_core.default_ps_max_latency_us=0to your kernel parameters and seeing if that (1) stops the hangs and (2) stops the errors rising in the SMART data.

However, if this is a new issue (as your first post seemed to indicate) and it worked fine before, it may be less likely. But I would still try the parameter. I just wouldn't trust the drive meanwhile with anything you don't have backed up.

CLI Paste | How To Ask Questions

Arch Linux | x86_64 | GPT | EFI boot | refind | stub loader | systemd | LVM2 on LUKS

Lenovo x270 | Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz | Intel Wireless 8265/8275 | US keyboard w/ Euro | 512G NVMe INTEL SSDPEKKF512G7L

Offline

#8 2022-12-18 08:57:16

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

I was wondering if maybe simply the plastic was breaking down and releasing the plasticizers.

Is the pad cushy/bouncy/springy and maybe somewhat sticky or just hard plastic?

Offline

#9 2022-12-18 14:20:59

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

I was wondering if maybe simply the plastic was breaking down and releasing the plasticizers.

Is the pad cushy/bouncy/springy and maybe somewhat sticky or just hard plastic?

Soft, cushy and sticky.

Might you get that from the bug seth mentioned?

Just my gut feeling. From my understanding / "feeling", internal device error values should be independent on external connectivity issues. That should go one step up to the next abstraction level.

It would be worth adding

nvme_core.default_ps_max_latency_us=0to your kernel parameters and seeing if that (1) stops the hangs and (2) stops the errors rising in the SMART data.

I was reluctant since that -- according to the smartctl-output -- means dissipating 6.5 W on the chip on the background of a possible heat problem.

But I now added it anyway. Now I found the following:

Dec 18 15:02:33 hostname kernel: nvme nvme0: I/O 364 (Read) QID 1 timeout, aborting

Dec 18 15:02:44 hostname kernel: nvme nvme0: I/O 269 (Read) QID 3 timeout, aborting

Dec 18 15:02:44 hostname kernel: nvme nvme0: I/O 270 (Read) QID 3 timeout, aborting

Dec 18 15:02:44 hostname kernel: nvme nvme0: I/O 271 (Read) QID 3 timeout, aborting

Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0

Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0

Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0

Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0

Dec 18 15:03:03 hostname kernel: nvme nvme0: I/O 364 QID 1 timeout, reset controller

Dec 18 15:03:03 hostname kernel: nvme0n1: Read(0x2) @ LBA 34638848, 32 blocks, Host Aborted Command (sct 0x3 / sc 0x71)

Dec 18 15:03:03 hostname kernel: I/O error, dev nvme0n1, sector 34638848 op 0x0:(READ) flags 0x80700 phys_seg 4 prio class 2

Dec 18 15:03:03 hostname kernel: nvme0n1: Read(0x2) @ LBA 34638888, 8 blocks, Host Aborted Command (sct 0x3 / sc 0x71)

Dec 18 15:03:03 hostname kernel: I/O error, dev nvme0n1, sector 34638888 op 0x0:(READ) flags 0x80700 phys_seg 1 prio class 2

Dec 18 15:03:03 hostname kernel: nvme0n1: Read(0x2) @ LBA 34638904, 16 blocks, Host Aborted Command (sct 0x3 / sc 0x71)

Dec 18 15:03:03 hostname kernel: I/O error, dev nvme0n1, sector 34638904 op 0x0:(READ) flags 0x80700 phys_seg 2 prio class 2

Dec 18 15:03:04 hostname kernel: nvme nvme0: failed to set APST feature (16386)

Dec 18 15:03:04 hostname kernel: nvme nvme0: 8/0/0 default/read/poll queuesOffline

#10 2022-12-18 14:50:05

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

Soft, cushy and sticky.

That's a heat pad - it's hopefully back in place and somewhat sticks to the chip that has (had) the gunk on it?

Theoretically these pads are not re-usable, but it's certainly better than nothing.

On the OP - does it help if you blow a lot of cold air against the NVME?

Is it maybe unfortunately placed in the heat radiation of other HW (eg. GPU and CPU will get MUCH hotter than is good for an NVME and in the worst case scenario, case and heatpad add to the chip temperature)

Offline

#11 2022-12-18 15:05:03

- cfr

- Member

- From: Cymru

- Registered: 2011-11-27

- Posts: 7,168

Re: [RESOLVED] Dying SSD?

Just my gut feeling. From my understanding / "feeling", internal device error values should be independent on external connectivity issues. That should go one step up to the next abstraction level.

That's what I thought, too, but I'm not confident I understand how SMART works with nvme devices (even though I'm using one).

Dec 18 15:02:33 hostname kernel: nvme nvme0: I/O 364 (Read) QID 1 timeout, aborting Dec 18 15:02:44 hostname kernel: nvme nvme0: I/O 269 (Read) QID 3 timeout, aborting Dec 18 15:02:44 hostname kernel: nvme nvme0: I/O 270 (Read) QID 3 timeout, aborting Dec 18 15:02:44 hostname kernel: nvme nvme0: I/O 271 (Read) QID 3 timeout, aborting Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0 Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0 Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0 Dec 18 15:02:44 hostname kernel: nvme nvme0: Abort status: 0x0 Dec 18 15:03:03 hostname kernel: nvme nvme0: I/O 364 QID 1 timeout, reset controller Dec 18 15:03:03 hostname kernel: nvme0n1: Read(0x2) @ LBA 34638848, 32 blocks, Host Aborted Command (sct 0x3 / sc 0x71) Dec 18 15:03:03 hostname kernel: I/O error, dev nvme0n1, sector 34638848 op 0x0:(READ) flags 0x80700 phys_seg 4 prio class 2 Dec 18 15:03:03 hostname kernel: nvme0n1: Read(0x2) @ LBA 34638888, 8 blocks, Host Aborted Command (sct 0x3 / sc 0x71) Dec 18 15:03:03 hostname kernel: I/O error, dev nvme0n1, sector 34638888 op 0x0:(READ) flags 0x80700 phys_seg 1 prio class 2 Dec 18 15:03:03 hostname kernel: nvme0n1: Read(0x2) @ LBA 34638904, 16 blocks, Host Aborted Command (sct 0x3 / sc 0x71) Dec 18 15:03:03 hostname kernel: I/O error, dev nvme0n1, sector 34638904 op 0x0:(READ) flags 0x80700 phys_seg 2 prio class 2 Dec 18 15:03:04 hostname kernel: nvme nvme0: failed to set APST feature (16386) Dec 18 15:03:04 hostname kernel: nvme nvme0: 8/0/0 default/read/poll queues

![]()

CLI Paste | How To Ask Questions

Arch Linux | x86_64 | GPT | EFI boot | refind | stub loader | systemd | LVM2 on LUKS

Lenovo x270 | Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz | Intel Wireless 8265/8275 | US keyboard w/ Euro | 512G NVMe INTEL SSDPEKKF512G7L

Offline

#12 2022-12-18 15:43:59

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

Afaiu the latency issue it induces an actual controller failure (ie. this is not a problem in the kernel or the bus, but in the nvme) and would expect that to get logged by the device.

Dec 18 15:03:04 hostname kernel: nvme nvme0: failed to set APST feature (16386)Are we sure the parameter has been applied?

How?

cat /proc/cmdlineOffline

#13 2022-12-18 15:56:11

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

How?

cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-linux root=/dev/mapper/vg0-arch rw cryptdevice=/dev/nvme0n1p2:luks:allow-discards psmouse.synaptics_intertouch=0 modprobe.blacklist=nouveau i915.enable_psr=1 iwlwifi.power_save=Y iwldvm.force_cam=N i915.enable_fbc=1 nvme_core.default_ps_max_latency_us=0 pcie_aspm=off loglevel=3 quietOffline

#14 2022-12-18 16:04:55

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

So unfortunately "yes", is "pcie_aspm=off" a mitigational effort or for different reasons?

Offline

#15 2022-12-18 16:05:42

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

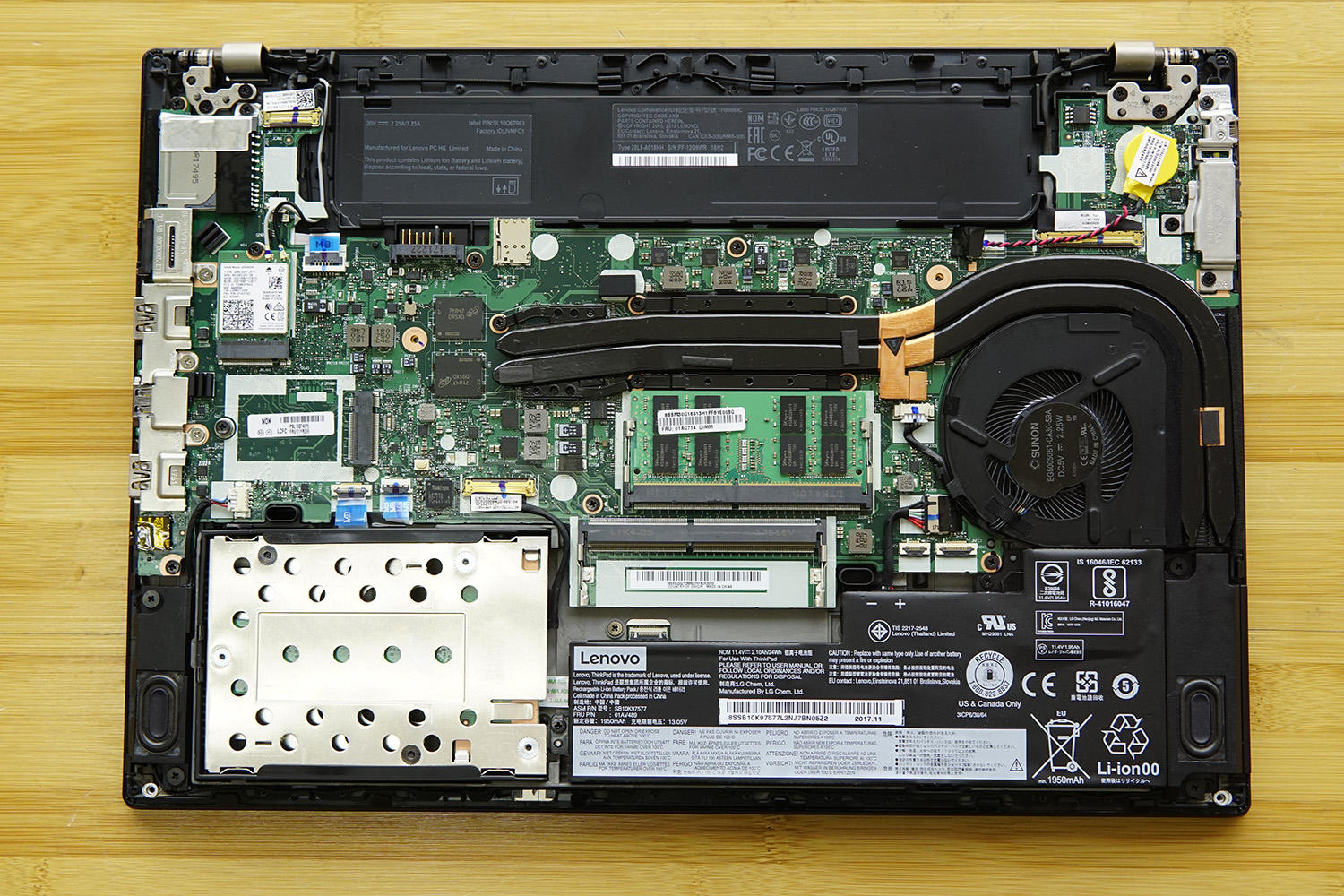

This is a similar version:

The metal case on the bottom left:

and opened up:

That's a heat pad - it's hopefully back in place and somewhat sticks to the chip that has (had) the gunk on it?

Yeah, it sticks to the metal, not the chip. So it should be as it was before.

Is it maybe unfortunately placed in the heat radiation of other HW (eg. GPU and CPU will get MUCH hotter than is good for an NVME and in the worst case scenario, case and heatpad add to the chip temperature)

To my muggle eyes, the thermal construction looks good.

Offline

#16 2022-12-18 16:13:24

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

So unfortunately "yes", is "pcie_aspm=off" a mitigational effort or for different reasons?

I added it as a try to handle this very message as we wanted to disable power-management anyway. It's removed now and ... so far no message anymore. Aha. Hm

Offline

#17 2022-12-18 16:53:53

- cfr

- Member

- From: Cymru

- Registered: 2011-11-27

- Posts: 7,168

Re: [RESOLVED] Dying SSD?

So is the SMART error count no longer increasing?

CLI Paste | How To Ask Questions

Arch Linux | x86_64 | GPT | EFI boot | refind | stub loader | systemd | LVM2 on LUKS

Lenovo x270 | Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz | Intel Wireless 8265/8275 | US keyboard w/ Euro | 512G NVMe INTEL SSDPEKKF512G7L

Offline

#18 2022-12-18 17:18:39

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

So is the SMART error count no longer increasing?

It's too early to say that. It used to be +1 or +2 a day. For the record, the current state is:

Num ErrCount SQId CmdId Status PELoc LBA NSID VS

0 554 0 0x5011 0xc005 0x000 0 0 -

1 553 0 0x000c 0xc005 0x000 0 0 -

2 552 0 0xf001 0xc005 0x000 0 0 -

3 551 0 0x5001 0xc005 0x000 0 0 -I'll now follow it closely and report back.

Offline

#19 2022-12-27 18:33:16

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

I'll now follow it closely and report back.

I haven't been using this computer much since then and no more errors appeared within the journal but the s.m.a.r.t. errors appear to keep rising.

Num ErrCount SQId CmdId Status PELoc LBA NSID VS

0 561 0 0x5018 0xc005 0x000 0 0 -

1 560 0 0x400f 0xc005 0x000 0 0 -

2 559 0 0x0000 0xc005 0x000 0 0 -

3 558 0 0x0005 0xc005 0x000 0 0 -Last edited by smoneck (2022-12-27 18:33:52)

Offline

#20 2022-12-27 20:50:37

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

nvme_core.default_ps_max_latency_us=0 is still in place?

Have you checked the temperature of the nvme under some overall system load? (cpu, gpu and nvme)

Offline

#21 2022-12-27 22:27:27

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

nvme_core.default_ps_max_latency_us=0 is still in place?

Have you checked the temperature of the nvme under some overall system load? (cpu, gpu and nvme)

nvme_core.default_ps_max_latency_us=0 is still in place and I haven't experienced any hick-ups anymore.

So far, the temperature looks good but I can't say anything about workloads at the moment as I was just doing light vim-typing.

Temperature Sensor 1 : 46°C (319 Kelvin)

Temperature Sensor 2 : 37°C (310 Kelvin)

Temperature Sensor 3 : 37°C (310 Kelvin)Judging from the inside of the laptop, it looks well enough constructed to me that cpu / gpu heat should not be an issue.

I'm gonna work from home tomorrow and I started logging the temperature sensors every minute.

Let's see, what comes out of it ... :-)

Last edited by smoneck (2022-12-27 22:27:52)

Offline

#22 2022-12-28 08:00:48

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

I haven't experienced any hick-ups anymore.

The nvme errors may be meaningless, https://www.smartmontools.org/wiki/NVMe_Support (look at the bottom example)

Offline

#23 2023-01-07 10:25:47

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

The nvme errors may be meaningless, https://www.smartmontools.org/wiki/NVMe_Support (look at the bottom example)

I'm not sure what you're basing that on.

The man-page of smartctl states

error - [ATA] prints the Summary SMART error log. SMART disks maintain a log of the most recent five non-trivial er‐

rors. For each of these errors, the disk power-on lifetime at which the error occurred is recorded, as is the device

status (idle, standby, etc) at the time of the error. For some common types of errors, the Error Register (ER) and

Status Register (SR) values are decoded and printed as text. The meanings of these are:

[...]

In addition, up to the last five commands that preceded the error are listed, along with a timestamp measured from the

start of the corresponding power cycle.I therefore suspect that in the example simply the last N errors are logged without any further information about their severity / type.

I have now looked at the history of temperature (sensors exposed in /sys/class/nvme/nvme0) and errors over the last few days. The vertical lines in gray mark the boots.

1) I do not see any critical temperatures (okay, we have winter here ...) - i.e. I guess the pad is leaking plasticizers.

2) The error counter seems to behave identically to the boot counts, and I can't see the times in the data where I turned it off hard (because it wasn't powering off porperly). I still have no idea what the counter means but in the meantime I am reasonably reassured that it is nothing serious. However, the next two months I have so much to do that I won't get to play with the mentioned qemu-fork.

Since adding `nvme_core.default_ps_max_latency_us=0` I haven't had any more problems with hangers and the battery consumption doesn't seem to have increased dramatically either.

Thank you so much for your support!

Last edited by smoneck (2023-01-07 10:34:23)

Offline

#24 2023-01-07 10:41:55

- seth

- Member

- From: Don't DM me only for attention

- Registered: 2012-09-03

- Posts: 72,262

Re: [RESOLVED] Dying SSD?

The nvme errors may be meaningless

Those "errors" are common and all over the place and not necessarily an indicator for problems.

https://archlinux.org/packages/communit … /nvme-cli/ might provide better insight.

sudo nvme error-log /dev/nvme0Offline

#25 2023-01-07 11:11:37

- smoneck

- Member

- Registered: 2014-05-14

- Posts: 105

Re: [RESOLVED] Dying SSD?

sudo nvme error-log /dev/nvme0

No new insights for me. ![]()

> sudo nvme error-log /dev/nvme0 | rg 'error_count|status_field'

error_count : 573

status_field : 0x6002(Invalid Field in Command: A reserved coded value or an unsupported value in a defined field)

error_count : 572

status_field : 0x6002(Invalid Field in Command: A reserved coded value or an unsupported value in a defined field)

error_count : 571

status_field : 0x6002(Invalid Field in Command: A reserved coded value or an unsupported value in a defined field)

error_count : 570

status_field : 0x6002(Invalid Field in Command: A reserved coded value or an unsupported value in a defined field)Offline